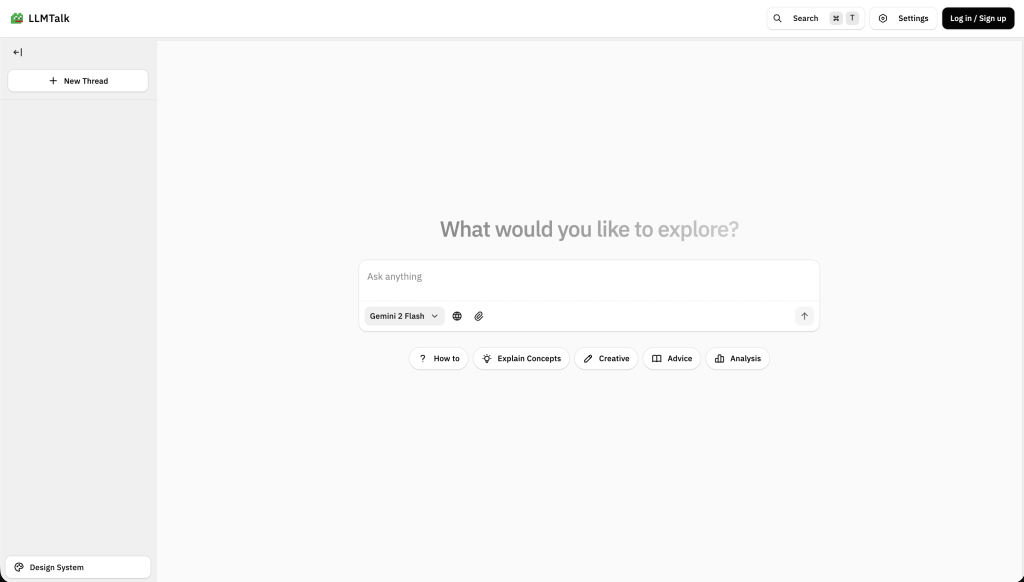

As AI models proliferate, switching between providers becomes tedious. LLMTalk is a unified chat interface that lets you interact with OpenAI, Anthropic (Claude), and Google Gemini from one place.

The Problem with Multiple AI Interfaces

Each provider has its own interface, API patterns, and quirks. Switching between them is inefficient. LLMTalk consolidates them into a single, consistent experience.

What Makes LLMTalk Special

Multi-Provider Support

LLMTalk supports:

- OpenAI (GPT-4, GPT-3.5)

- Anthropic (Claude)

- Google Gemini

Switch models without leaving the interface.
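One way to let users switch models freely is to derive the provider from the selected model ID. The sketch below assumes model IDs follow each vendor's naming prefix (`gpt-`, `claude-`, `gemini-`). `providerForModel` and the prefix table are hypothetical, not LLMTalk's code.

```typescript
type ProviderId = "openai" | "anthropic" | "google";

// Assumed naming prefixes; a real app would likely list supported model IDs explicitly.
const MODEL_PREFIXES: ReadonlyArray<readonly [string, ProviderId]> = [
  ["gpt-", "openai"],
  ["claude-", "anthropic"],
  ["gemini-", "google"],
];

// Resolve which provider should handle a request for the selected model.
function providerForModel(modelId: string): ProviderId {
  const match = MODEL_PREFIXES.find(([prefix]) => modelId.startsWith(prefix));
  if (!match) {
    throw new Error(`Unknown model: ${modelId}`);
  }
  return match[1];
}
```

Prefix matching is a shortcut. Some OpenAI models, such as `o1`, don't start with `gpt-`, so an explicit table of supported model IDs is more robust in practice.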

Flexible API Key Management

It offers two modes:

- System mode: API keys are read from environment variables

- Own mode: you enter and manage your own keys in the app

This makes it practical both for shared team deployments and for personal projects.
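Here is a minimal sketch of how the two modes might resolve a key for each request. `resolveApiKey` is hypothetical. The environment variable names are assumptions, borrowed from the defaults that the Vercel AI SDK provider packages read.

```typescript
type ProviderId = "openai" | "anthropic" | "google";
type KeyMode = "system" | "own";

// Assumed env var names (Vercel AI SDK provider defaults), not confirmed for LLMTalk.
const ENV_VAR_FOR: Record<ProviderId, string> = {
  openai: "OPENAI_API_KEY",
  anthropic: "ANTHROPIC_API_KEY",
  google: "GOOGLE_GENERATIVE_AI_API_KEY",
};

// System mode reads from the environment; own mode uses keys the user entered in-app.
function resolveApiKey(
  provider: ProviderId,
  mode: KeyMode,
  env: Record<string, string | undefined>,
  userKeys: Partial<Record<ProviderId, string>>,
): string {
  const key = mode === "system" ? env[ENV_VAR_FOR[provider]] : userKeys[provider];
  if (!key) {
    throw new Error(`No ${provider} API key available in ${mode} mode`);
  }
  return key;
}
```

The function takes `env` as a parameter instead of reading `process.env` internally, which keeps the logic easy to test. A server-side caller would simply pass `process.env`.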

Real-Time Streaming

Responses stream in as they are generated, including “Thinking” and “Reasoning” tokens from models that expose them, so you can follow the model's progress in real time.
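To show reasoning and answer text separately, a client can route each streamed chunk into its own buffer. The sketch assumes a simplified `StreamPart` type with `reasoning` and `text` variants. The Vercel AI SDK's real stream parts have their own names and shapes, so treat this as illustrative only.

```typescript
// Hypothetical chunk shape: real SDK stream parts differ in naming and fields.
type StreamPart =
  | { type: "reasoning"; delta: string }
  | { type: "text"; delta: string };

interface MessageState {
  reasoning: string; // accumulated "Thinking"/"Reasoning" tokens
  text: string; // accumulated answer tokens
}

// Pure reducer: append the chunk to the buffer that matches its type.
function applyPart(state: MessageState, part: StreamPart): MessageState {
  return part.type === "reasoning"
    ? { ...state, reasoning: state.reasoning + part.delta }
    : { ...state, text: state.text + part.delta };
}

// Fold an async stream into the state, notifying the UI after each chunk.
async function consumeStream(
  parts: AsyncIterable<StreamPart>,
  onUpdate: (state: MessageState) => void,
): Promise<MessageState> {
  let state: MessageState = { reasoning: "", text: "" };
  for await (const part of parts) {
    state = applyPart(state, part);
    onUpdate(state);
  }
  return state;
}
```

Because the reducer is pure, it is easy to test and to plug into a state store. The `onUpdate` callback is where a component would trigger a re-render.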

Persistent Chat History

Conversations are organized into threads and stored client-side in the browser, so they persist across sessions.
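Thread-based history can be modeled as a set of threads, each holding its own messages and persisted under its own key. LLMTalk uses IndexedDB via Dexie for storage. The sketch below swaps in a hypothetical key-value interface (`KeyValueStore`) with an in-memory backend so that it runs anywhere. `ThreadRepository` and its title rule are illustrative.

```typescript
// Hypothetical types and names throughout; not LLMTalk's schema.
interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

interface Thread {
  id: string;
  title: string;
  messages: ChatMessage[];
  updatedAt: number; // epoch milliseconds
}

// Stand-in for IndexedDB/Dexie so the sketch is runnable without a browser.
interface KeyValueStore {
  get(key: string): string | undefined;
  set(key: string, value: string): void;
}

class ThreadRepository {
  private readonly store: KeyValueStore;

  constructor(store: KeyValueStore) {
    this.store = store;
  }

  load(id: string): Thread | undefined {
    const raw = this.store.get(`thread:${id}`);
    return raw === undefined ? undefined : (JSON.parse(raw) as Thread);
  }

  // Append a message and persist the whole thread, creating it on first use.
  append(id: string, message: ChatMessage, now: number = Date.now()): Thread {
    const thread: Thread = this.load(id) ?? {
      id,
      title: message.content.slice(0, 40), // hypothetical rule: first message names the thread
      messages: [],
      updatedAt: now,
    };
    thread.messages.push(message);
    thread.updatedAt = now;
    this.store.set(`thread:${id}`, JSON.stringify(thread));
    return thread;
  }
}

// In-memory backend; data lives as long as the store object does.
function memoryStore(): KeyValueStore {
  const data = new Map<string, string>();
  return {
    get: (key) => data.get(key),
    set: (key, value) => {
      data.set(key, value);
    },
  };
}
```

Every `append` writes through to the store. A fresh `ThreadRepository` over the same backend, which is roughly what a page reload amounts to, therefore sees the full thread.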

Custom AI Rules

Define custom instructions per conversation to shape model behavior.
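A common way to apply per-conversation rules is to prepend them as a system message to the history sent to the model. This is an assumption about LLMTalk's mechanism, and `buildMessages` is a hypothetical helper.

```typescript
type Role = "system" | "user" | "assistant";

interface Message {
  role: Role;
  content: string;
}

// Turn the conversation's rules into one system message placed before the history.
function buildMessages(rules: string[], history: Message[]): Message[] {
  const active = rules.map((r) => r.trim()).filter((r) => r.length > 0);
  if (active.length === 0) {
    return history; // no rules: send the history unchanged
  }
  const system: Message = {
    role: "system",
    content: active.map((r) => `- ${r}`).join("\n"),
  };
  return [system, ...history];
}
```

Provider APIs differ on this point. Anthropic's Messages API, for example, takes the system prompt as a separate top-level `system` parameter rather than as a message, and a unified layer has to smooth over exactly this kind of difference.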

Modern Design System

A documented design system at /design-system includes:

- Typography scales

- Color palettes (light/dark)

- Reusable components

- Animation patterns

- Modal templates

Technical Architecture

Built with Modern Web Technologies

Frontend Framework: Next.js 14 with App Router

- Server-side rendering

- Route-based code splitting

- Built-in API routes

Language: TypeScript

- Type safety

- Better developer experience

Styling: Tailwind CSS

- Utility-first CSS

- Responsive design

- Dark mode support

UI Components: Radix UI

- Accessible primitives

- Unstyled, customizable components

State Management: Zustand

- Lightweight

- Simple API

- Good performance

AI Integration: Vercel AI SDK

- Unified API for multiple providers

- Streaming support

- Built-in error handling

Key Features Implementation

- Streaming Responses: uses the Vercel AI SDK streaming API for real-time updates.

- Chat History: IndexedDB (via Dexie) for client-side persistence.

- Theme System: next-themes with system preference detection.

- Rich Text Editor: TipTap for markdown support and formatting.
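System preference detection comes down to mapping a stored preference (`light`, `dark`, or `system`) to a concrete theme. next-themes handles this and exposes the result as `resolvedTheme` from its `useTheme` hook. The hypothetical `resolveTheme` below only illustrates the rule.

```typescript
type ThemePreference = "light" | "dark" | "system";
type ResolvedTheme = "light" | "dark";

// In a browser, prefersDark would come from
// window.matchMedia("(prefers-color-scheme: dark)").matches.
function resolveTheme(preference: ThemePreference, prefersDark: boolean): ResolvedTheme {
  if (preference === "system") {
    return prefersDark ? "dark" : "light";
  }
  return preference; // an explicit choice always wins over the OS setting
}
```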

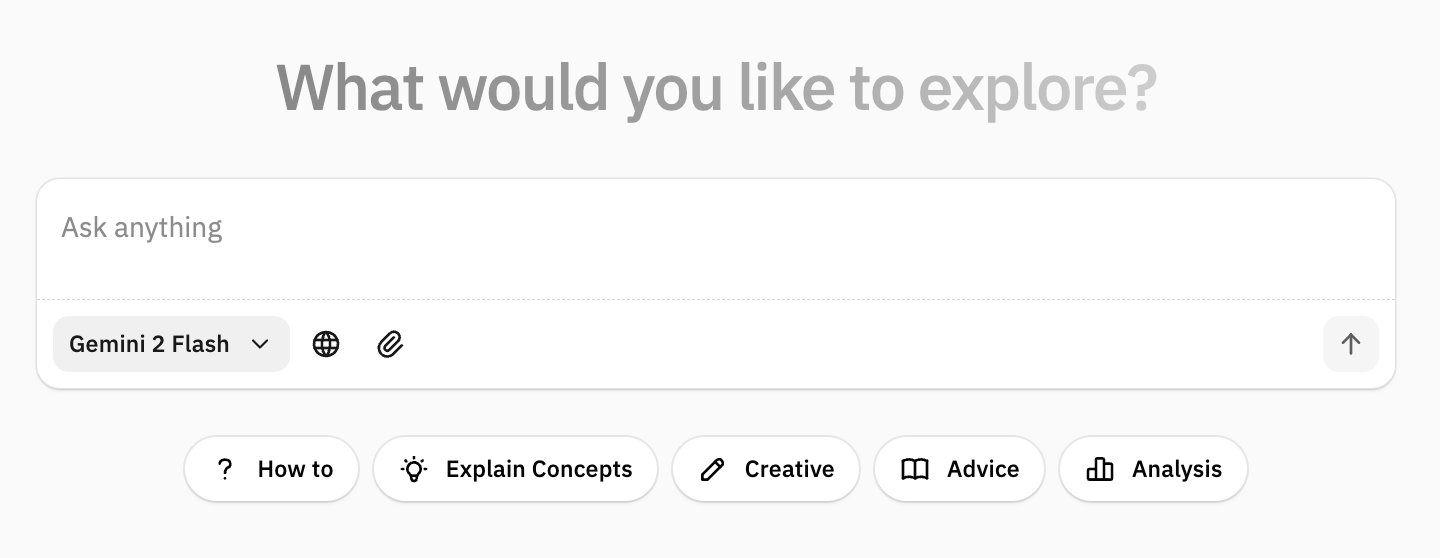

Design Philosophy

The design prioritizes:

- Simplicity: clean, uncluttered interface

- Consistency: unified experience across providers

- Accessibility: keyboard navigation and screen reader support

- Performance: optimized rendering and state management

Use Cases

- Comparing responses across models

- Development and testing

- Personal productivity

- Learning different model behaviors

The Future of AI Interfaces

As models evolve, unified interfaces become more important. LLMTalk demonstrates how to:

- Abstract provider differences

- Provide a consistent UX

- Support extensibility

- Maintain performance

Open Source and Extensible

The codebase is structured for extension. Adding new providers is straightforward, and the component system supports customization.
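This kind of extensibility usually rests on a single provider interface that each vendor adapter implements, plus a registry that the chat layer looks up. The sketch below is hypothetical: `ChatProvider`, `registerProvider`, `getProvider`, and the echo stub are not taken from the LLMTalk codebase. Under this design, adding a provider means writing one adapter and registering it.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatProvider {
  readonly id: string;
  // Stream the reply as text chunks; each adapter hides its vendor's API shape.
  streamChat(model: string, messages: ChatMessage[]): AsyncIterable<string>;
}

const providers = new Map<string, ChatProvider>();

function registerProvider(provider: ChatProvider): void {
  if (providers.has(provider.id)) {
    throw new Error(`Provider "${provider.id}" is already registered`);
  }
  providers.set(provider.id, provider);
}

function getProvider(id: string): ChatProvider {
  const provider = providers.get(id);
  if (!provider) {
    throw new Error(`Unknown provider "${id}"`);
  }
  return provider;
}

// Stub adapter that echoes the last message; handy for UI tests without API keys.
const echoProvider: ChatProvider = {
  id: "echo",
  async *streamChat(_model, messages) {
    const last = messages[messages.length - 1];
    yield last?.content ?? "";
  },
};

registerProvider(echoProvider);
```

The chat UI then only calls `getProvider(id).streamChat(...)`, so vendor-specific request formats stay inside each adapter.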

Conclusion

LLMTalk shows that a unified interface can improve the AI interaction experience. By consolidating multiple providers into one interface, it makes working with AI models more accessible and efficient.

Whether you’re a developer, researcher, or enthusiast, having one interface for multiple AI models streamlines your workflow and lets you focus on what matters: the conversation.

Try it: http://llm-talk-five.vercel.app/